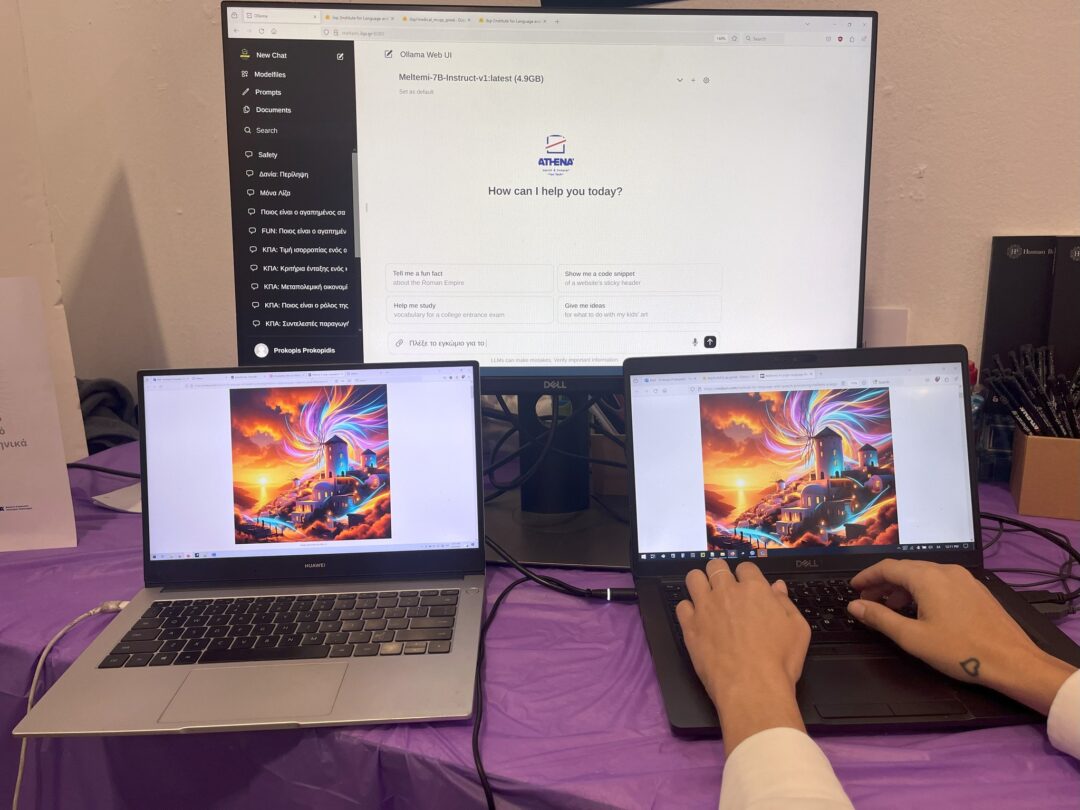

‘Meltemi’ is the first Greek Large Language Model (LLM), trained by the Institute for Language and Speech Processing of the Athena Research & Innovation Center on a corpus of high-quality Greek texts.

Large Language Models (LLMs) have revolutionized the field of AI, opening up new opportunities for research and industry applications. It has been shown that open LLMs are competitive alternatives to commercial, siloed solutions, and using them can provide a higher level of control over the development of safe, application-optimized models.

However, because of the sheer volume of data required, most open LLMs have been trained on vast, mostly English, monolingual datasets (e.g., Dolma), which limits their performance in other languages. Recently, there have been efforts to extend the capabilities of open LLMs to other languages (e.g., LeoLM for German, Aguila for Spanish, etc.).

To address these challenges, the Institute for Language and Speech Processing (ILSP) developed and released Meltemi, the first LLM for the Greek language. Meltemi was developed as a bilingual model: while remaining highly proficient in English, it has been extended to understand and generate fluent text in Modern Greek. It was built on top of the Mistral-7B language model, which was trained on a large corpus of English text; ILSP extended it with proficiency in Greek using a large corpus of approximately 40 billion tokens (units of text that roughly correspond to words or word fragments).

Out of these 40 billion tokens, 28.5 billion are Greek, derived from publicly available resources. Furthermore, to ensure that the model has bilingual capabilities, ILSP used additional sub-corpora with 10.5 billion tokens of English texts and a parallel Greek-English dataset of 600 million tokens. This corpus has been processed, filtered, and deduplicated to ensure data quality.
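As a quick check on the reported corpus figures, the short Python sketch here tallies the three sub-corpora and computes each one's share of the total. The dictionary labels are our own shorthand, not ILSP's names for the datasets. The sum comes to 39.6 billion tokens, which matches the "approximately 40 billion" quoted for the full corpus, with Greek making up roughly 72% of the mix.

```python
# Reported Meltemi training corpus, in billions of tokens.
# The labels are illustrative shorthand, not official dataset names.
corpus = {
    "Greek": 28.5,
    "English": 10.5,
    "Greek-English parallel": 0.6,  # 600 million tokens
}

# Total size of the corpus (reported as "approximately 40 billion").
total = sum(corpus.values())
print(f"Total: {total:.1f}B tokens")

# Share of each sub-corpus in the total.
for name, size in corpus.items():
    print(f"{name}: {size}B tokens ({size / total:.1%})")
```

These percentages describe only the size of each sub-corpus. The article does not say how often each one was sampled during training.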

There are two variants of Meltemi: the foundation model and a model that can be used for chat applications.

As for the model’s name, “Meltemi is a strong, dry north wind that blows across the Aegean Sea, during the summer months, with its peak usually occurring in July and August. Its intensity can vary from gentle breezes to strong gales, making it both a vital aspect of local weather and a significant factor in the region’s climate.” The name ‘Meltemi’ was chosen to symbolize the feeling of freshness and rejuvenation this cool breeze brings.

ILSP’s Interdisciplinary History

The Institute for Language and Speech Processing (ILSP) was established in 1985 by a small team of scientists working on the EUROTRA machine translation project. This team, composed of linguists and information technologists and led by NTUA Professor George Carayannis, gradually grew both in size and scope of activities.

In 1991, ILSP was officially recognized as a Research Institute under the auspices of the General Secretariat of Research and Technology. In 2003, ILSP became part of the newly established Athena Research and Innovation Center in Information, Communication and Knowledge Technologies (Athena R.C.).

As Nasos Katsamanis, principal researcher and deputy director at ILSP, tells the newspaper Kathimerini, the team that built Meltemi came together thanks to one savage tweet: “About a year ago, colleagues from the U.S. came here for a seminar. They told us they were working on new language models and asked us what we were doing here for the Greek language. This discussion coincided with a very scathing comment on Twitter from someone well known in the field, who wrote: ‘What are they doing at the Athena Research Center? Won’t they finally produce a Greek language model?’ So, after all that, here we are with our Meltemi.”

Given the Institute’s history of bringing together researchers from various fields, such as linguistics and information technology, to work on language technologies, it was always a question of “If not us, then who?” However, as Kathimerini reports, four key elements were necessary for such a project to take shape:

- Linguistic data, meaning texts containing billions of words

- Machine power, in this case Amazon’s cloud computing infrastructure accessed through GRNET – National Infrastructures for Research and Technology

- Algorithms

- Expertise

Without the Institute’s data collection projects, active since the 1990s, the team would not have been able to create the first Greek Large Language Model.

30 Billion Greek Words

“We collected numerous Greek texts, homogenized them, and prepared them. We removed duplicate texts and those with toxic, racist, and sexist content. The Greek words we have collected and included in a large training corpus total 30 billion, although some words are repeated. Of these, eight million were collected within 12 months,” explains researcher Prokopis Prokopidis.

The undertaking was further complicated by two factors: cost and time. In this case, a three-day mistake would have cost them more than $7,000.

“The team had reserved the cloud computing infrastructure for a specific period, and the usage cost amounted to $100 per hour. There was a moment when we realized that a mistake would cost us dearly. In the end, we fixed it, but I will never forget how anxious I felt,” recalls researcher Georgios Paraskevopoulos.
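The "more than $7,000" figure for a three-day mistake follows directly from the $100 hourly rate. The Python sketch here reproduces the arithmetic, assuming (our assumption, not stated in the article) that the reserved infrastructure was billed around the clock, 24 hours a day. The function name `idle_cost` is hypothetical.

```python
HOURLY_RATE_USD = 100  # reported cloud usage cost per hour
HOURS_PER_DAY = 24     # assumption: the reservation was billed around the clock


def idle_cost(days: float, rate: float = HOURLY_RATE_USD) -> float:
    """Hypothetical helper: cost of reserved compute time lost over `days` days."""
    return days * HOURS_PER_DAY * rate


# Three days of lost time at $100 per hour.
print(idle_cost(3))  # 7200
```

Three days come to 72 billed hours, or $7,200, consistent with the "more than $7,000" quoted.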

Because the choice of training data shapes the results users receive, the team excluded the vast data sources of Greek social media from the outset, choosing instead to use theses, books, school textbooks, Greek legislation and any other material free of copyright restrictions.

“It’s actually very difficult to make a model in a language that doesn’t have as much reach as English. A Portuguese speaker can understand a Spanish speaker, so you can adapt a Spanish model to Portuguese, just as you can adapt a Russian model to Bulgarian. This is not the case for Greek. It is a language spoken by about 15 million people on the planet, less than 0.5% of the world’s population. So we had to do it from scratch, paying great attention to getting the data right,” Georgios Paraskevopoulos stresses.

Meltemi Uses

Although Meltemi is available as an open model for research and commercial purposes, the general public cannot yet try it online, as they can with ChatGPT, because the team still needs to resolve some technical issues. Small and medium-sized businesses are already using customized versions of it to build specific products in the health, education, tourism and culture sectors.

Giving a concrete example of where Meltemi can be used, scientist Maria Yagou tells Kathimerini that the institute is currently creating a digital assistant based on Meltemi. In an educational setting, the assistant will be able to chat with students, answer questions on their teaching material, create exercises tailored to their needs, explain terms and even simplify texts from their textbooks. In the health sector, it could interpret a doctor’s medical reports or even produce a report at the user’s request.

Members of the team behind Meltemi

Vasilis Katsouros: “We are here for the digital survival of the Greek language”

One of the challenges we are discussing is the timelessness of the Greek language: how can a language model handle everything from Ancient Greek to dialects and colloquial speech? This is a complex undertaking. But we are here to support the digital survival of the Greek language.

Meltemi can be applied to anything you can imagine, from economics to commerce, as long as the foundation model is adapted to specific data from the field in question.

Prokopis Prokopidis: “Our role is to support Greek with new technologies”

Building Meltemi was a challenge because Greek has a different alphabet, which makes it harder to transfer knowledge from other languages or dialects. Apart from the fact that relatively few people speak, write and produce content in Greek, there are also few people interested in protecting the language.

I believe that the role of the Institute for Language and Speech Processing at the Athena Research Center is to support the Greek language with new technologies.

Nasos Katsamanis: “We didn’t have the funding that OpenAI had”

We are talking to at least 50 different companies and organizations right now about integrating Meltemi into their products or services. The techniques OpenAI followed were not unknown to the wider community; what mattered was that OpenAI had multi-million-dollar funding. We didn’t have anything similar.

Maria Yagou: “Meltemi is a key tool for SMEs”

We are currently working with small and medium-sized businesses to build specific products in the health, education, tourism and culture sectors. There are also many SMEs who come to us to teach them the basics of AI, as all this change has caught them somewhat unprepared.

At the same time, we are developing digital assistants for specific services, aimed at guiding and informing citizens on various issues.

Sokratis Sofianopoulos: “We want Meltemi to be accessible to everyone”

Meltemi is open source: anyone can download it and use it for research purposes as well as for developing innovative applications. In the future, we aim for the data we use to be free of copyright restrictions.

Stelios Piperidis: “Language models need continuous training”

Training language models is not something you do once and are done with. For its first seven months of operation, ChatGPT stated that its knowledge extended only to September 2021, because its training data ended at that point.

Consider that when the pandemic arrived, our own speech systems could not recognize words such as “coronavirus” or “SARS-CoV-2”. We had to train the systems to learn these words, just as we ourselves learned them.

I.L. with information from ILSP and Kathimerini
